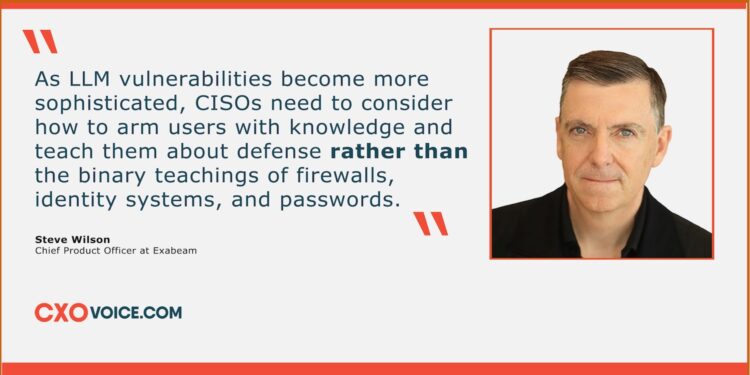

Cybersecurity is often an afterthought when it comes to the adoption of large language models (LLMs). The rapid deployment of LLMs is bringing new security challenges with it. In an exclusive interview with Steve Wilson, Chief Product Officer of Exabeam, we discuss growing cybersecurity challenges, the use of GenAI and LLMs in cybersecurity, and how businesses can defend their IT infrastructure while balancing the need for digital transformation.

Tell us about Exabeam’s AI-led cloud-native security products and their usefulness in TDIR.

At Exabeam, we’ve been using machine learning-based artificial intelligence (AI) for over ten years to enable faster and more accurate threat detection, investigation, and response (TDIR). Today, AI is a core part of our cloud-native portfolio and can be described in two parts.

One part of this portfolio is our cloud-native Exabeam Security Operations Platform, which provides advanced security information and event management (SIEM) capabilities. The Exabeam Security Operations Platform applies AI and automation to help analysts ingest, parse, store, and search data with maximized efficiency.

The second part of our portfolio is our AI analytics. Our real-time, high-performance machine learning algorithms are designed to streamline the TDIR workflow to drive greater analyst efficiency. They give security teams insight into what is normal and what is abnormal in their organization, and they help reduce false positives.
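To make the idea of "normal" versus "abnormal" concrete, here is a minimal sketch of baseline-based anomaly scoring. It is not Exabeam's algorithm; the function name `anomaly_score`, the login counts, and the threshold of 3.0 are all assumptions chosen for illustration. It uses a simple z-score against a user's own history.

```python
from statistics import mean, stdev


def anomaly_score(history, observed):
    """Return how many standard deviations `observed` lies from the baseline."""
    if len(history) < 2:
        return 0.0  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


# Hypothetical daily login counts for one user over two weeks.
daily_logins = [4, 5, 6, 5, 4, 5, 6, 5, 4, 6, 5, 5, 4, 6]

THRESHOLD = 3.0  # assumed cut-off; raising it produces fewer alerts

for todays_count in (6, 40):
    score = anomaly_score(daily_logins, todays_count)
    label = "abnormal" if score > THRESHOLD else "normal"
    print(f"{todays_count} logins -> {label}")
```

Scoring each user against their own history, rather than against a fixed global rule, is one common way to cut false positives: six logins is unremarkable for this user, while forty stands out.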

The goal of our AI innovation is to allow security teams to easily detect hard-to-spot threats, investigate faster, and respond with greater speed. In turn, this strengthens the overall cybersecurity posture of their organization.

How effective is GenAI in cybersecurity-related solutions?

When we look at the industry, almost every cybersecurity player is exploring use cases for generative AI (GenAI) and how its features can enhance their products.

Despite this, the feedback on some of these GenAI-powered cybersecurity solutions is that they are too general-purpose and do not add meaningful value to security operations center (SOC) activities. Their answers are vague, they tend to hallucinate, and they can be slow and sometimes confusing.

From the start, our approach at Exabeam was to build our GenAI models based on real-time AI capabilities. Our advanced machine learning algorithms can rapidly analyze terabytes and petabytes of data, providing laser-focused output that delivers context to our GenAI-powered assistant, Exabeam Copilot.

In turn, our copilot is providing value by filling knowledge gaps for a large variety of organizations and users. The response since its launch this year has been positive, with customers reporting that analysts are two to three times faster at completing investigation tasks when using Exabeam Copilot. It is also our fastest adopted product release to date.

How do you see the balance between automated response and the need for human expertise in AI-led cybersecurity solutions?

AI algorithms are not just a useful tool to have in cybersecurity. In today’s digital landscape, analyzing the vast amounts of data collected by security platforms is an impossible task for analysts to perform without some kind of AI and machine learning in place.

This is where real-time machine learning algorithms can help. GenAI tools are designed to summarize complicated facts and analyze certain kinds of data. Ultimately, they generate data-driven insights, such as risk scores, to which human analysts can apply their judgment.

Whilst GenAI seems to exhibit some decision-making qualities, it is nowhere near the human level of judgment. When using these tools in a mission-critical environment, the right model is a copilot.

Deploying a copilot allows organizations to strike the right balance between AI innovation and human expertise to augment the analyst experience. This approach combines AI’s ability to analyze massive amounts of data with human insight and judgment to provide a comprehensive response to the risk.

How can developers secure LLMs and mitigate the risks associated with them?

There’s a whole list of new vulnerabilities that come up when you look at the risks associated with LLMs, such as prompt injection and data poisoning. What’s interesting is that a lot of the defenses for these vulnerabilities are not the typical cybersecurity defenses that you’d put in place for traditional automated systems.

While firewalls and identity systems are important, security teams need to remember that an LLM is a human-like entity. Attacks from these entities can trick users into giving out sensitive information. In this case, the defenses need to be more aligned with those used against phishing attacks, and the LLM should be treated more like a user than a traditional software component.

As LLM vulnerabilities become more sophisticated, chief information security officers (CISOs) need to consider how to arm users with knowledge and teach them about defense rather than the binary teachings of firewalls, identity systems, and passwords.
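One way to read "treat the LLM like a user" in code is to give the model least privilege and to inspect what it says before anyone acts on it. The sketch below is illustrative only: the action names, the `redact` and `is_action_permitted` helpers, and the two regular expressions are assumptions, and real deployments would need far more thorough checks alongside user education.

```python
import re

# Hypothetical allowlist of actions the assistant may request.
ALLOWED_ACTIONS = {"search_logs", "summarize_alert"}

# Rough patterns for data that should not leave the system; illustrative only.
SECRET_PATTERNS = [
    re.compile(r"(?i)password\s*[:=]\s*\S+"),
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like number
]


def redact(text):
    """Replace secret-looking substrings in model output with a placeholder."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def is_action_permitted(action):
    """Permit only allowlisted actions, as one would for a low-privilege user."""
    return action in ALLOWED_ACTIONS


model_reply = "The admin password: hunter2 was found in the log."
print(redact(model_reply))
print(is_action_permitted("delete_user"))
```

The point of the design is that model output is handled as untrusted input, in the same way a message from an unknown sender would be, rather than as the output of a trusted software component.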

How crucial is it to address security challenges associated with LLMs? How do you see GenAI’s future in cybersecurity?

Cybersecurity is often an afterthought when it comes to the adoption of LLMs. The rapid deployment of LLMs occurs in two main ways and is bringing new security challenges with it.

The first way is adoption by users within an organization through bring-your-own-AI or shadow IT. It’s so easy to access ChatGPT or Google Gemini that users do it without thinking about the possible security implications.

The second way comes from cybersecurity vendors adding LLMs to their products. A year ago, the uses for GenAI were mostly novel. It could be used to help write an essay or draft a report, but it didn’t have access to large amounts of mission-critical data.

Today, cybersecurity vendors are giving these models more and more access to highly sensitive mission-critical data. This gives the models context about the status of an organization’s networks and vulnerabilities, and that context could be used against the organization.

What we’re seeing move to the top of every CISO’s list is how to get a real strategy in place to address this challenge. Our mission-critical data is now in the hands of AI, and learning to manage the security of this data effectively is critical.

Tell us about the Exabeam Copilot feature on the cybersecurity platform.

Exabeam Copilot is a collection of GenAI capabilities aimed at different use cases for accelerating the TDIR workflow. Currently, there are a number of use cases for Exabeam Copilot.

One use case is helping analysts search using their native language. Traditionally in SIEM tools, you have to learn a query language that’s usually based on something that looks like SQL. This is one of the things that prevents new analysts from getting up to speed quickly. With Exabeam Copilot, analysts can type in their native language, ask questions about the data that they’re storing, and automatically have the system search it.

Another of these use cases is threat explanation. For years, security teams have been able to get a timeline view of why a threat had been prioritized and see some of the input from low-level AI algorithms. While experienced analysts like this capability, more junior analysts take quite a while to learn its nuances. Exabeam Copilot solves this challenge by instantly summarizing its findings and explaining why a threat has been prioritized in plain English. Analysts can then ask follow-up questions and have an open dialogue with the system.

What role do you see LLMs playing in the future of cybersecurity, both at Exabeam and in the industry?

We are currently at the beginning of the phase where LLMs are becoming useful.

From an Exabeam perspective, Exabeam Copilot demonstrates an effective model that leverages an organization’s network data to optimize analyst efficiency. Our copilot can automate tasks, translate queries, and deliver actionable insights. This is an area we will continue to develop and add new use cases to as we focus on enabling faster, more precise TDIR.

Across the wider industry, I anticipate future LLM trends will be geared towards agent-based systems. Attackers are adopting AI tools at an unprecedented rate, and defenders will need to react with the same speed and agility. This is where automated agent-based tools come in.

I believe we’re going to see a shift where security teams find the use cases to really prove that we can trust the machines to make security-based decisions and put those decisions in the hands of the tools. For example, this could lead to agent-based systems being trusted to shut down a user account, inform the humans that it happened, and explain why this action was taken.
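The account-shutdown example can be sketched as a small agent that acts, then informs humans and explains itself. This is a toy model under stated assumptions: the `ResponseAgent` class, the 0-100 risk score, and the threshold of 90 are hypothetical, and adding a name to a set stands in for a real call to an identity system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ResponseAgent:
    risk_threshold: float = 90.0  # assumed cut-off on a 0-100 risk score
    disabled_accounts: set = field(default_factory=set)
    notifications: list = field(default_factory=list)

    def handle(self, user, risk_score, reason):
        """Disable the account when risk is high and record an explanation for humans."""
        if risk_score < self.risk_threshold:
            return False  # below threshold: leave the decision to analysts
        self.disabled_accounts.add(user)  # stand-in for a real identity-system call
        self.notifications.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": "account_disabled",
            "explanation": f"risk score {risk_score} >= {self.risk_threshold}: {reason}",
        })
        return True


agent = ResponseAgent()
agent.handle("alice", 35.0, "login from usual location")
agent.handle("bob", 97.0, "impossible travel followed by mass file download")

for note in agent.notifications:
    print(note["user"], note["action"], "-", note["explanation"])
```

Pairing every automated action with a timestamped, human-readable explanation is what lets analysts audit the agent's decisions and decide how much to trust it.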

This shift to agent-based technologies will come over the next few years, but there’s still a lot of fundamental research to be done to enable this at scale.

What future advancements in LLM technology do you predict, and how do you see them shaping the era of AI and machine learning?

If you look at the history of digital computing, all the way back to World War II, computers were invented to break German codes and compute artillery trajectories. We’ve been using them in a similar way ever since.

Historically, algorithms were really good at math, providing strong value within spreadsheets, data, analytics and databases. What they were not so good at was processing language.

The dramatic shift we’re now seeing is algorithms that can interact using human language, as witnessed with LLMs such as ChatGPT.

Within this field, the latest focus area for LLMs is around reasoning and decision-making abilities. We’re seeing a lot of development in this area to generate new types of training, data and algorithms to analyze responses from LLMs and find out if they’re hallucinating.

The aim is for LLMs to reason in multiple steps rather than simply predicting the next set of words in a flow. I see this being the next big research area for the technology as it evolves over the next few years.

Cyberattacks are continuously growing; how can organizations defend against cyber threats while balancing the need for digital transformation?

Cyberattacks are becoming more sophisticated, and modern cybersecurity tools are evolving quickly to keep pace. In response, CISOs need to ensure their security operations match the speed at which tools and attacks are advancing.

The key here is for security leaders to step away from deploying more cybersecurity tools and blindly following the latest trends. Instead, every security team should be looking at what data they have access to and how they can most effectively utilize that.

Balancing the ever-evolving threat landscape against the need for digital transformation will depend on the SOC team’s ability to make better use of its network data to make faster and more efficient security decisions.
