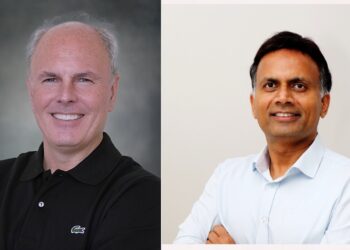

Cerebras Systems, the pioneer in accelerating artificial intelligence (AI) compute, announced its global expansion with the opening of a new office in Bangalore, India. Led by industry veteran Lakshmi Ramachandran, the new engineering office will focus on accelerating R&D efforts and supporting local customers. With more than twenty engineers currently employed and a target of more than sixty by year-end, Cerebras aims to build its presence in Bangalore rapidly.

“India in general and Bangalore in particular, is extremely well-positioned to be a hotbed for AI innovation. It has world-leading universities, pioneering research institutions, and a large domestic enterprise market,” said Andrew Feldman, CEO and co-founder of Cerebras Systems. “Cerebras is committed to being a leader in this market. Under Lakshmi’s leadership, we are rapidly hiring top-notch engineering talent for Cerebras Systems India and supporting sophisticated regional customers who are looking to do the most challenging AI work more quickly and easily.”

As part of the India expansion, Cerebras appointed Ramachandran as Head of Engineering and India Site Lead at Cerebras India. Based in Bangalore, Ramachandran brings more than 24 years of technical and leadership experience in software engineering. Before joining Cerebras, she held various engineering and leadership roles at Intel. Most recently, she was Senior Director in Intel’s Data Center and AI group, responsible for delivering key capabilities of deep learning software for AI accelerators. She has extensive experience scaling business operations and establishing engineering teams in India.

“I am honored to be part of Cerebras Systems’ mission to change the future of AI compute, and to work with the extraordinary team that made wafer scale compute a reality,” said Ramachandran. “We have already begun to build a world class team of top AI talent in India, and we are excited to be building core components here that are critical to the success of Cerebras’ mission. We look forward to adding more technology and engineering talent as we support the many customer opportunities we have countrywide.”

Cerebras describes the CS-2 as the fastest AI system in existence. It is powered by the largest processor ever built, the Cerebras Wafer-Scale Engine 2 (WSE-2), which is 56 times larger than the nearest competing chip. As a result, the CS-2 delivers more AI-optimized compute cores, faster memory, and more fabric bandwidth than any other deep learning processor. It was purpose-built to accelerate deep learning workloads, reducing time to answer by orders of magnitude.

Using a CS-2, Cerebras recently set a world record for the largest AI model trained on a single device. This matters because, in natural language processing (NLP), larger models trained on larger datasets have been shown to be more accurate. Traditionally, however, only the largest and most sophisticated technology companies had the resources and expertise to train massive models across hundreds or thousands of graphics processing units (GPUs). By making it possible to train GPT-3 models on a single CS-2, Cerebras aims to let the entire AI ecosystem set up and train large models in a fraction of the time.

Source: Business Wire India

(CXOvoice may have edited only the headline of this article; the rest of the content is auto-generated from a syndicated feed.)