AMD has formally announced an expanded collaboration with HPE and unveiled Helios, a new rack-scale AI architecture designed to meet fast-growing demand for large-scale AI compute across cloud, enterprise, and research workloads.

HPE will be among the first system providers to offer Helios-based systems to customers worldwide starting in 2026.

What is “Helios”?

- Helios is a full-stack, open, rack-scale AI platform that combines AMD’s compute and networking technologies, including AMD EPYC CPUs, AMD Instinct GPUs, and AMD Pensando networking, with the open-source ROCm software stack.

- The architecture is optimized for high-performance, large-scale AI workloads. According to the announcement, a single Helios rack can deliver up to 2.9 exaFLOPS of FP4 performance.

- Networking is handled by a purpose-built HPE Juniper Networking scale-up Ethernet switch, developed in collaboration with Broadcom.

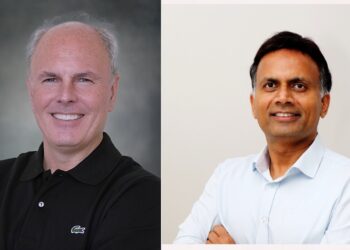

“HPE has been an exceptional long-term partner to AMD, working with us to redefine what is possible in high-performance computing,” said Dr Lisa Su, Chair and CEO, AMD. “With ‘Helios’, we’re taking that collaboration further, bringing together the full stack of AMD compute technologies and HPE’s system innovation to deliver an open, rack-scale AI platform that drives new levels of efficiency, scalability, and breakthrough performance for our customers in the AI era.”

“For more than a decade, HPE and AMD have pushed the boundaries of supercomputing, delivering multiple exascale-class systems and championing open standards that accelerate innovation,” said Antonio Neri, president and CEO at HPE. “With the introduction of the new AMD ‘Helios’ and our purpose-built HPE scale-up networking solution, we are providing our cloud service provider customers with faster deployments, greater flexibility, and reduced risk in how they scale AI computing in their businesses.”

The Helios rack is built on open standards, including the Open Rack design from the Open Compute Project (OCP). This approach is intended to reduce vendor lock-in and improve interoperability, making it easier for cloud providers, enterprises, and research centres to deploy AI infrastructure at scale.

With its stated 2.9 exaFLOPS of FP4 performance per rack, Helios targets the training of very large models and inference at scale, including trillion-parameter models and high-throughput AI services.

The combination of high compute throughput, open-standards networking, and close vendor collaboration could lower the barrier for organizations looking to deploy powerful AI clusters.
