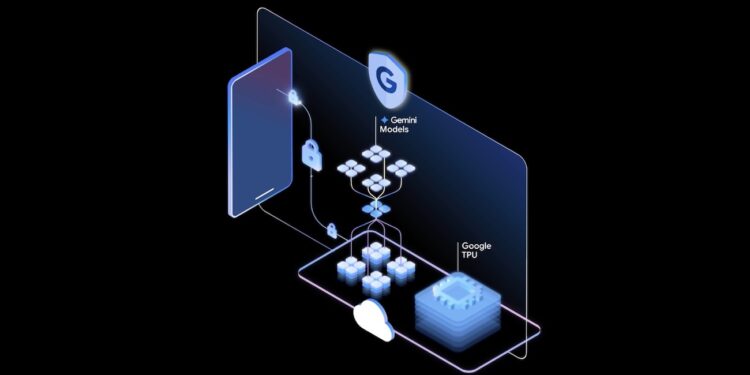

Google this week unveiled Private AI Compute, a secure cloud platform that runs its Gemini models on compute-heavy AI tasks for users while promising that the data those models process stays private to the user: “not even Google” can access it.

Google announced Private AI Compute on Nov. 11, 2025, as a new way to give phones and other devices access to a cloud platform with privacy guarantees. The company positions it as a hybrid: cloud horsepower with on-device-style privacy.

Google, in a blog post: “Remote attestation and encryption are used to connect your device to the hardware-secured, sealed cloud environment, allowing Gemini models to securely process your data within a specialised, protected space. This ensures sensitive data processed by Private AI Compute remains accessible only to you and no one else, not even Google.”

Key features and technical guardrails

- Secure enclaves & hardware attestation: Google says it uses hardware-secured enclaves and device→cloud attestation so a device can verify it is talking to a trusted compute environment.

- Powered by Google’s stack: The platform leverages Google’s cloud ML hardware (TPUs) and other internal technologies to run Gemini-class models at scale.

- “Not even Google” claim + third-party review: Google asserts it cannot access user data processed inside these sealed environments. According to press coverage, an external assessment looked for vulnerabilities and surfaced some low-risk issues that Google has addressed or contextualised. Independent verification will remain a continuing focus.
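To make the attestation idea concrete, here is a minimal sketch of the check a device might run before sending any data to a sealed environment. It is an illustration under stated assumptions, not Google's actual protocol: the names `AttestationReport`, `sign_report`, `verify_report`, and `TRUSTED_MEASUREMENTS` are hypothetical, and an HMAC with a shared demo key stands in for the hardware-rooted asymmetric signature and certificate chain a real system would use.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass

# Hypothetical allow-list of software builds the device is willing to trust.
TRUSTED_MEASUREMENTS = {hashlib.sha256(b"sealed-environment-build-1").hexdigest()}


@dataclass
class AttestationReport:
    measurement: str  # hash of the software the environment claims to run
    nonce: bytes      # freshness challenge chosen by the device
    signature: bytes  # stand-in for a hardware-rooted signature


def sign_report(key: bytes, measurement: str, nonce: bytes) -> bytes:
    # Assumption: HMAC replaces the asymmetric signature real hardware produces.
    return hmac.new(key, measurement.encode() + nonce, hashlib.sha256).digest()


def verify_report(key: bytes, report: AttestationReport, expected_nonce: bytes) -> bool:
    # Accept only if the signature is valid, the nonce is the one the device
    # just issued, and the measured software is on the allow-list.
    expected_sig = sign_report(key, report.measurement, report.nonce)
    return (
        hmac.compare_digest(report.signature, expected_sig)
        and hmac.compare_digest(report.nonce, expected_nonce)
        and report.measurement in TRUSTED_MEASUREMENTS
    )


# Demo: the device issues a nonce, the environment answers with a report.
key = b"demo-shared-key"  # illustrative only
nonce = os.urandom(16)
measurement = hashlib.sha256(b"sealed-environment-build-1").hexdigest()
good = AttestationReport(measurement, nonce, sign_report(key, measurement, nonce))

if verify_report(key, good, nonce):
    print("Attestation passed: safe to open an encrypted session")
```

In this sketch the device would refuse to send data if any of the three checks fails, which is the general shape of the "verify before you trust" step described above.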

Initial user experiences / product tie-ins

Google says Private AI Compute will first augment Pixel features, for example by improving the Pixel 10’s Magic Cue contextual suggestions and expanding Recorder app capabilities (multilingual summarisation and more advanced transcription features), with the heavier tasks offloaded to the sealed cloud. Expect the earliest examples to appear on Google’s own hardware and first-party apps.

Why does this matter now?

- Privacy + capability is the new battleground. Users want powerful AI (summaries, reasoning, multi-modal features) but increasingly insist on privacy guarantees. Apple’s Private Cloud Compute (PCC) pushed the industry to provide a privacy-forward cloud option; Google’s move signals this is now a mainstream product strategy.

- Hybrid delivery becomes standard. Purely on-device models are constrained by hardware. Pure cloud models raise privacy concerns. Hybrid sealed-cloud solutions are emerging as the pragmatic middle path.

- Competition and differentiation. Vendors will compete on the quality of models, the strength and auditability of privacy claims, latency/UX, and how widely they open these platforms to third-party apps.
